Free payslip template for small businesses

Create a professional payslip in minutes

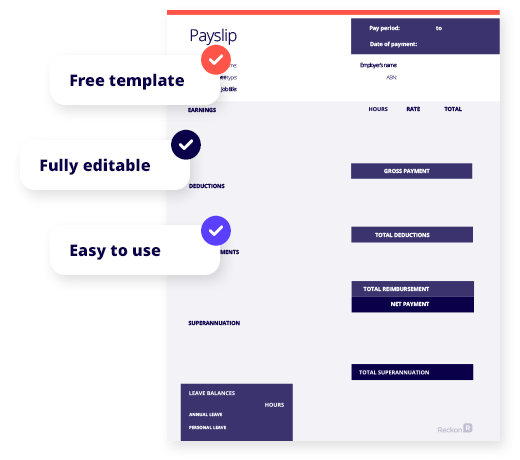

Free template

Our Australian payslip template can save you time & money.

Fully editable

Ready to customise with all the required details, such as earnings, deductions, reimbursements and superannuation.

Easy to use

Download your free payslip template

Payslip template

Use our free payslip template for small businesses. The template is a fully editable and customisable document that includes all the information Australian law requires on a payslip, helping you save time on payroll compliance. Simply add your employees' payroll details!

Why choose software over a payslip template?

Up your payroll game with Reckon’s payroll software and manage wages, leave, super and Single Touch Payroll for your employees from just $12/month.

Payroll that’s designed for small business

A modern design and clear workflows make managing pay runs, wages, leave, superannuation, Single Touch Payroll and data entry a breeze.

Simply enter your default settings, add employee details and hit pay! Saving time is one of the many benefits of payroll software.

Fair and affordable payroll software pricing

Easily manage wages, leave, super and STP for up to 4 employees from just $12/month with our payroll software. As your business grows, our pricing scales fairly with its size, making it an affordable option for Aussie small businesses that need a payroll system.

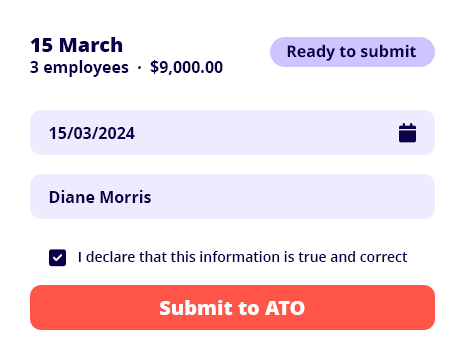

STP Phase 2 and ATO compliant

Process pay runs & send your STP reports directly to the ATO each payday in just a click. You can even keep track of ATO messages and access past submissions.

We’ve processed 500,000+ successful STP reports for our customers in 2023 alone!

Stay up to date with payroll & tax regulations

Staying up to date with the latest payroll and tax compliance changes is simple: updates such as PAYG tax tables, superannuation guarantee rates and SuperStream requirements are pushed automatically into Reckon payroll software, leaving you more time to focus on running your business.

Aussie payroll software with local support

Our cloud payroll software is designed and built for the Australian market with local support available when you need it.

Reckon is a proud Australian, publicly listed company with over 30 years of experience in the industry, so your payroll management is in safe hands!

Reckon Payroll software vs free payslip template

Features compared:

- Automatically email payslips

- Manage Single Touch Payroll

- Easily share online with your employees

- Send directly from your mobile

- Schedule pay runs

- Automatic payroll and compliance updates

Free downloadable templates

Invoice template

Free & customisable tax invoice for your small business.

Payslip template

Free & editable payslip template for Aussie small businesses.

Business plan

Free editable business plan template to build out your business strategy.

Cashflow forecast template

Free cashflow forecast template for small businesses.

Balance sheet template

Free & customisable balance sheet for your small business.

Profit & Loss template

Free & editable profit & loss template for Aussie small businesses.

Cashflow statement template

Free cashflow statement template for small businesses.

Quote template

Free quote template for small businesses.

Business continuity plan template

Free continuity plan template for small businesses.

Frequently asked questions

What is a payslip?

A payslip is a document that records an employee's income for a pay period. It is broken down into three key areas: gross pay, tax and deductions, and net pay, which is the amount the employee actually receives. Payslips help employees keep track of their payments and deductions and make sure they are being paid correctly. As an employer, you need to give your employees a payslip for every pay run, either by email or as a paper copy.

What should a payslip template include?

A payslip must include the following information:

- the amount of pay, both gross (before tax) and net (after tax);

- the date of payment;

- the pay period;

- any loadings, bonuses or penalty rate entitlements;

- deductions;

- superannuation contributions including the name of the super fund;

- the employer’s name and ABN if they have one; and

- the employee’s name.

If an employee is paid an hourly rate, the payslip should also show their ordinary hourly rate and the number of hours they worked at that rate. If an employee is paid an annual salary, the payslip should show their annual rate of pay as at the last day of the pay period. While it is not a legal requirement, it is best practice to include the employee's leave balances on each payslip.
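The fields above, and the gross pay, deductions and net pay breakdown, map naturally onto a simple record. The sketch below is a minimal illustration in Python, not Reckon's software or a complete payroll system: the `Payslip` class, its field names and all example figures are hypothetical; tax withheld is entered as a figure rather than calculated from the ATO's PAYG tax tables; and employer superannuation contributions are treated as paid on top of wages, so they do not reduce net pay.

```python
from dataclasses import dataclass, field
from datetime import date
from decimal import Decimal
from typing import Dict, Optional


@dataclass
class Payslip:
    """Hypothetical record of the payslip fields listed above (illustrative only)."""
    employer_name: str
    employer_abn: Optional[str]   # ABN, if the employer has one
    employee_name: str
    period_start: date
    period_end: date
    payment_date: date
    hourly_rate: Decimal          # ordinary hourly rate
    hours_worked: Decimal         # hours worked at the ordinary rate
    loadings_and_bonuses: Decimal = Decimal("0")
    tax_withheld: Decimal = Decimal("0")  # entered manually; not calculated from tax tables here
    other_deductions: Dict[str, Decimal] = field(default_factory=dict)
    super_fund: str = ""
    super_contribution: Decimal = Decimal("0")  # employer contribution, paid on top of wages

    @property
    def gross_pay(self) -> Decimal:
        # Gross pay = ordinary rate x hours worked + any loadings or bonuses
        return self.hourly_rate * self.hours_worked + self.loadings_and_bonuses

    @property
    def net_pay(self) -> Decimal:
        # Net pay = gross pay - tax withheld - all other deductions
        total_deductions = sum(self.other_deductions.values(), Decimal("0"))
        return self.gross_pay - self.tax_withheld - total_deductions


# Example with made-up figures
slip = Payslip(
    employer_name="Example Pty Ltd",
    employer_abn=None,
    employee_name="Alex Citizen",
    period_start=date(2024, 7, 1),
    period_end=date(2024, 7, 7),
    payment_date=date(2024, 7, 8),
    hourly_rate=Decimal("30.00"),
    hours_worked=Decimal("38"),
    tax_withheld=Decimal("150.00"),
    other_deductions={"union fees": Decimal("10.00")},
    super_fund="Example Super Fund",
    super_contribution=Decimal("131.10"),
)
print(slip.gross_pay)  # 1140.00
print(slip.net_pay)    # 980.00
```

`Decimal` is used instead of `float` so that cent amounts add and subtract exactly.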

When do I need to provide a payslip?

As an employer, you must provide payslips within one working day of paying your employees.
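Reading "within one working day" as "by the next working day after payday", the latest date to issue a payslip can be worked out as sketched below. This is a hypothetical helper, not part of any Reckon product, and it assumes Saturday and Sunday are non-working days; public holidays vary by state and territory, so the caller has to supply them.

```python
from datetime import date, timedelta
from typing import AbstractSet


def payslip_due_date(pay_date: date, public_holidays: AbstractSet[date] = frozenset()) -> date:
    """Return the next working day after pay_date (hypothetical helper).

    Assumes weekends are non-working days; public holidays must be passed in.
    """
    due = pay_date + timedelta(days=1)
    # weekday(): Monday is 0, so 5 and 6 are Saturday and Sunday
    while due.weekday() >= 5 or due in public_holidays:
        due += timedelta(days=1)
    return due


# Paid on Friday 5 July 2024: the payslip is due by Monday 8 July 2024
print(payslip_due_date(date(2024, 7, 5)))  # 2024-07-08
```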

How long do I need to keep payroll information?

As an employer, you must keep employee records, including timesheets and payroll information, for 7 years. See the Fair Work Ombudsman website for more information.

Is there a payslip format I should follow?

There is no specific format your payslip must follow, as long as it contains all the legally required information. However, most payslips share a common layout that people recognise and are familiar with. It makes sense to keep your format close to these conventions (e.g. employer name at the top, gross pay before net pay), as this will help your employees understand their payslips easily.

How do I create a payslip?

A payslip is generated from your payroll & employee information.

You can use our free payslip template to create a payslip manually; however, one of the easiest ways to create payslips is to generate them automatically with your payroll software.

Need payroll software? Check out Reckon’s payroll software for small business!

Do I need payslips if I report under STP?

Yes, you must still provide payslips to your employees even if you report via STP.

However, the STP finalisation declaration has replaced the need to produce payment summaries for employees. The information previously provided on payment summaries is now available in each employee's myGov account as an income statement. If an employee doesn't have a myGov account, their accountant can access this information via the Tax Agent Portal.

Is your payslip generator STP compliant?

Sending your employees payslips is only a small part of your payroll compliance obligations as an employer. You are also required to comply with specific rules set by regulators such as the ATO and the Fair Work Ombudsman. One of these obligations relates to Single Touch Payroll: once you've processed your pay run, you still need to submit the payroll information to the ATO via an STP-enabled software solution. For more information on STP-compliant products, see our range of payroll solutions.

Try Reckon Payroll today!

30 days free. Cancel anytime.