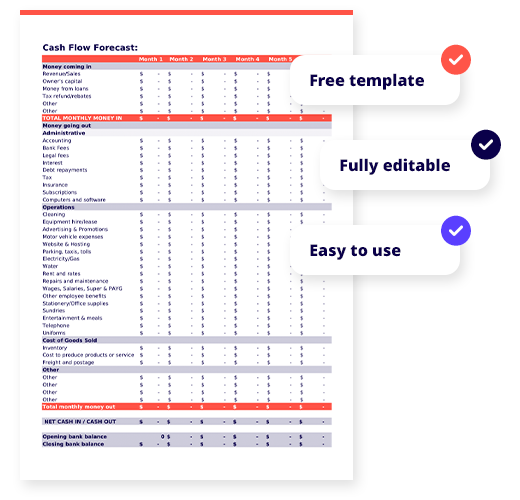

Free cash flow forecast template for small businesses

Create a cash flow forecast in minutes

Free template

Fully editable

Easy to use

Download your free cash flow forecast template

Cash flow forecast template

Free downloadable templates

Invoice template

Free & customisable tax invoice for your small business.

Payslip template

Free & editable payslip template for Aussie small businesses.

Business plan

Free editable business plan template to build out your business strategy.

Cash flow forecast template

Free cash flow forecast template for small businesses.

Balance sheet template

Free & customisable balance sheet for your small business.

Profit & Loss template

Free & editable profit & loss template for Aussie small businesses.

Cash flow statement template

Free cash flow statement template for small businesses.

Quote template

Free quote template for small businesses.

Business continuity plan template

Free continuity plan template for small businesses.

Frequently asked questions

What is a cash flow forecast?

A cash flow forecast is one of the most important planning tools for a business. It estimates the money coming in and going out over a future period, so you can see whether the business will have enough cash to cover its running costs or to fund expansion. Understanding cash flow is crucial for maintaining financial health and planning for the future. To learn more about the concept of cash flow and how it affects your business, check out our detailed explanation on Cash Flow.

Benefits of cash flow forecasting

How to use the cash flow forecast template

Our cash flow forecast template is a fully editable and customisable Excel document that helps you map out your business's future sales and expenses. Download the template, fill out the money coming in section, then enter your monthly expenses to calculate the health of your cash flow.
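If you would like to see the arithmetic behind a forecast like this, here is a minimal Python sketch. It assumes the usual approach: each month's net cash flow is money in minus money out, and the closing balance carries forward as the next month's opening balance. The names (`Month`, `forecast`) and the figures are hypothetical examples for illustration, not the formulas or layout used in the template itself.

```python
from dataclasses import dataclass


@dataclass
class Month:
    name: str
    money_in: int   # sales and other receipts expected this month
    money_out: int  # expenses expected this month


def forecast(opening_balance, months):
    """Return (name, net cash flow, closing balance) for each month."""
    rows = []
    balance = opening_balance
    for m in months:
        net = m.money_in - m.money_out  # net cash flow for the month
        balance += net                  # closing balance rolls forward
        rows.append((m.name, net, balance))
    return rows


# Hypothetical figures, for illustration only.
months = [
    Month("Jul", 12000, 9500),
    Month("Aug", 8000, 10500),
    Month("Sep", 15000, 11000),
]

for name, net, closing in forecast(5000, months):
    flag = "  <-- shortfall" if closing < 0 else ""
    print(f"{name}: net {net:+,}, closing balance {closing:,}{flag}")
```

A month with a negative net figure is not necessarily a problem. The closing balance is the number to watch, because it shows whether the business still has cash on hand after that month.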