Free business plan template for small businesses

Create your free professional business plan with our easy-to-use business plan template.

Create a professional business plan in minutes

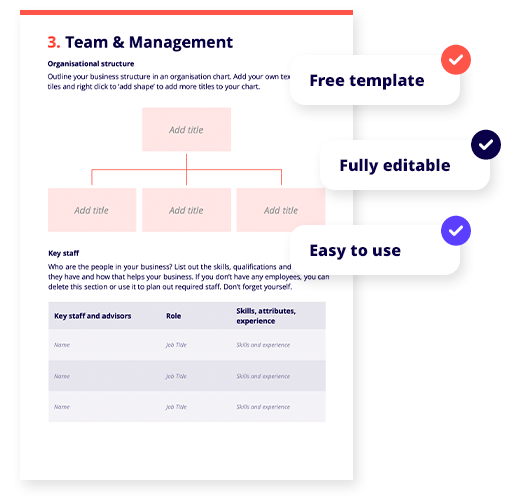

Free template

Our Australian business plan template can save you time & money.

Fully editable

Ready to customise with everything you need, such as your business details, organisational structure, competitor analysis and goals.

Easy to use

Simply add the requested data and our template will create a business plan for you.

Download your free business plan template

Business plan template

Our business plan template is an editable document for recording your business's goals and objectives for the future. It also provides all the key sections you need for a professional business plan, including a business overview, market research, competitive analysis, financial forecasts and much more.

Free downloadable templates

Invoice template

Free & customisable tax invoice for your small business.

Payslip template

Free & editable payslip template for Aussie small businesses.

Business plan

Free editable business plan template to build out your business strategy.

Cashflow forecast template

Free cashflow forecast template for small businesses.

Balance sheet template

Free & customisable balance sheet for your small business.

Profit & Loss template

Free & editable profit & loss template for Aussie small businesses.

Cashflow statement template

Free cashflow statement template for small businesses.

Quote template

Free quote template for small businesses.

Business continuity plan template

Free continuity plan template for small businesses.

Frequently asked questions

How do you write a business plan?

Writing a multi-page business plan when you are just starting out can seem overwhelming. The key to getting started is to keep it simple and add to it as you grow. Start with key headings (or use our template) and some bullet points mapping out your business overview, vision, market analysis and financial forecasts. For a more detailed step-by-step approach, check out our comprehensive guide on How to Write a Business Plan.

What is the importance of a business plan?

Writing a business plan gives your business the best chance of success. It helps you flesh out your business proposal, outline key business processes and set an action plan for what you want to achieve over time. While it can seem overwhelming, the time and effort you put in is worth it for your long-term success. Use our free business plan template below to get started!

What should a business plan include?

A business plan consists of a single document with different sections that represent different aspects of your business. Most business plans include the following:

- Business Overview

- Executive Summary

- Team & Management

- Products & Services

- Operations

- Market Analysis

- Competitor Analysis

- Marketing & Promotions

- Financial Analysis

- Future & Goals

What are some tips for writing a business plan?

Check out our page about the 7 Tips for Writing a Business Plan.