When making a GST claim for business expenses, there are several intricacies and responsibilities you need to take into account. It’s prudent to be aware of your rights as a small business owner when approaching the topic of a GST refund.

If you’re a small business owner or sole trader registered for (and charging) goods and services tax (GST), are you aware of GST credits, also called input tax credits? Do you know how to calculate GST and how to claim it?

If you don’t know or you aren’t sure, you’ve come to the right place.

How to claim GST

A GST credit (otherwise known as an input tax credit) is a credit you can claim for the GST portion of your business-related expenses.

Essentially, if you’re a business owner or sole trader registered for GST and you purchase goods and services for your business, you may be eligible to claim credits for the GST you paid on those business-related goods and services.

The Australian Taxation Office (ATO) allows the credit only for the GST portion of each business expense, not the full purchase price.

Your business claims GST credits on the Business Activity Statements (BAS) it lodges with the ATO. The credits are offset against the GST you collected on your sales; if your credits are larger, the ATO refunds the difference.
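As a rough sketch of how credits are factored in, the Python below nets the GST collected on sales against GST credits for one BAS period. It is a simplification: the figures are hypothetical, and it ignores every other item a BAS may include (such as PAYG instalments or withholding).

```python
from decimal import Decimal


def net_gst(gst_on_sales: Decimal, gst_credits: Decimal) -> Decimal:
    """Net GST for one BAS period: GST collected on sales minus GST credits.

    A positive result is payable to the ATO; a negative result is a refund.
    Simplified sketch: other BAS items are deliberately left out.
    """
    return gst_on_sales - gst_credits


# Hypothetical quarter: $1,200 GST collected, $1,500 GST credits.
result = net_gst(Decimal("1200.00"), Decimal("1500.00"))
if result < 0:
    print(f"Refund due: ${-result}")
else:
    print(f"GST payable: ${result}")
```

With these hypothetical figures the credits exceed the GST collected, so the sketch reports a $300.00 refund.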

Claiming GST on business expenses

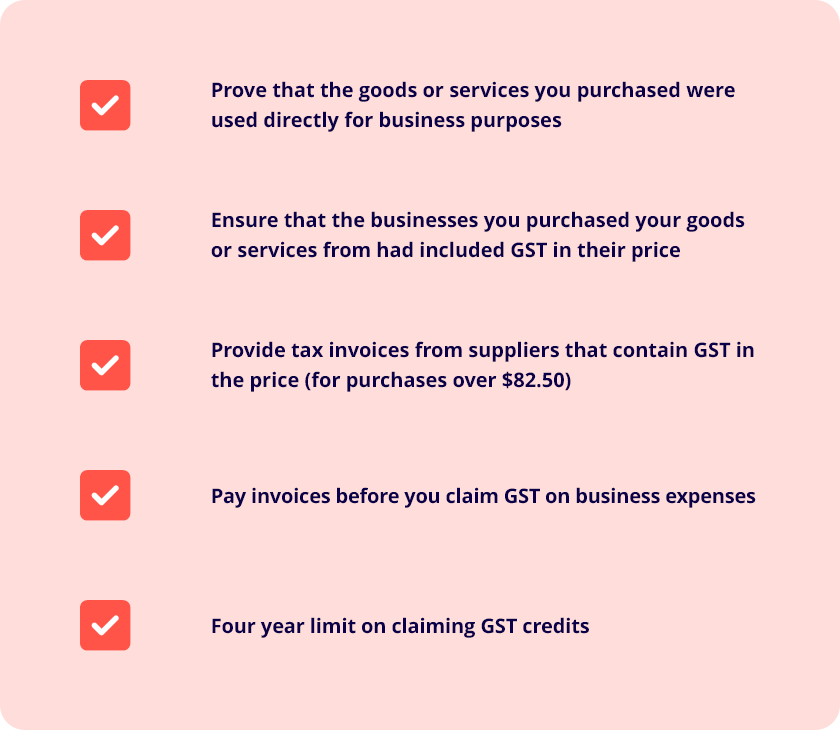

Are you constantly wondering, ‘As a small business, what can I claim for?’ There are many expenses you can claim for, but there are steps you need to take:

- To claim GST credits, your small business will need to show that the goods or services were purchased for use in your business, just as with any business asset you claim for (such as under the instant asset write-off).

- You’ll need to ensure that the businesses you purchased your goods or services from were registered for GST and included GST in their price (check the invoice or receipt). If you’re unsure whether a supplier is registered for GST, you can check its ABN using the ABN Lookup service.

- You’ll need tax invoices from your suppliers showing the GST included in the price. A tax invoice is required for purchases over A$82.50 (including GST).

- You need to have paid the invoice, or be liable to pay it, before you claim GST on a business expense.

- You have a four-year limit on claiming GST credits, which gives you a long window to process and receive your credit.
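The checklist above can be sketched as a single eligibility check. This is a minimal illustration, not an ATO tool: the `Purchase` record and its field names are hypothetical, and the four-year window is simplified to run from a single start date you supply (the ATO defines exactly when the period begins, so confirm that with your advisor). It also includes the rule, covered in the next section, that your own business must be registered for GST.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal

# Purchases above this GST-inclusive amount need a tax invoice.
TAX_INVOICE_THRESHOLD = Decimal("82.50")
# Simplified four-year claim window (start date supplied by the caller).
CLAIM_WINDOW_YEARS = 4


@dataclass
class Purchase:
    """Hypothetical record of one business purchase."""
    price_incl_gst: Decimal
    for_business: bool          # bought for use in the business
    supplier_registered: bool   # supplier was registered for GST
    gst_included: bool          # GST was included in the price
    has_tax_invoice: bool
    paid_or_liable: bool        # invoice paid, or you are liable to pay it
    window_start: date          # simplified start of the four-year window


def within_window(start: date, today: date) -> bool:
    # Compare (year, month, day) tuples so a 29 February start never raises an error.
    deadline = (start.year + CLAIM_WINDOW_YEARS, start.month, start.day)
    return (today.year, today.month, today.day) <= deadline


def can_claim_gst_credit(p: Purchase, today: date, business_registered: bool) -> bool:
    """Return True if the purchase passes every check in the list above."""
    if not business_registered:
        return False
    if not (p.for_business and p.supplier_registered
            and p.gst_included and p.paid_or_liable):
        return False
    if p.price_incl_gst > TAX_INVOICE_THRESHOLD and not p.has_tax_invoice:
        return False
    return within_window(p.window_start, today)


# Hypothetical desk purchase that meets every condition.
desk = Purchase(Decimal("550.00"), True, True, True, True, True, date(2024, 7, 28))
print(can_claim_gst_credit(desk, today=date(2025, 3, 1), business_registered=True))
```

Each condition returns early, so the first failed check explains why a purchase is rejected; a real bookkeeping system would also record the reason.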

When can a small business claim GST?

GST credits for business purchases are claimed on your Business Activity Statement (BAS), which most small businesses lodge with the ATO quarterly.

For example, if you bought a desk for work and the GST portion of its price was $50, you can claim a $50 GST credit on your BAS. The credit reduces the GST you owe the ATO; it is not a deduction from your income tax.
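At Australia's 10% GST rate, a GST-inclusive price is the base price × 1.1, so the GST portion is one-eleventh of the price. The sketch below assumes the desk cost a hypothetical $550 including GST, which gives the $50 credit in the example. Rounding to the nearest cent is a simplification for illustration.

```python
from decimal import Decimal, ROUND_HALF_UP

GST_RATE = Decimal("0.10")  # Australian GST rate of 10%
CENT = Decimal("0.01")


def gst_portion(price_incl_gst: Decimal) -> Decimal:
    """GST included in a GST-inclusive price: price x rate / (1 + rate), i.e. price / 11."""
    gst = price_incl_gst * GST_RATE / (1 + GST_RATE)
    return gst.quantize(CENT, rounding=ROUND_HALF_UP)


print(gst_portion(Decimal("550")))  # 50.00
```

`Decimal` is used instead of `float` so that amounts like 82.50 are represented exactly.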

When is a small business unable to claim GST?

- Your small business will be unable to claim GST credits for business-related purchases from suppliers who aren’t registered for GST. For example, those suppliers may be operating under the GST registration threshold, so be sure to scrutinise such purchases, especially from sole traders who may have lower levels of income and may not be required to register for, or charge, GST.

- You cannot claim GST credits when your own business is not registered for GST.

- You cannot claim GST credits for purchases that don’t have GST in the price. Examples include basic food, bank fees, and residential accommodation.

- You cannot claim for wages you pay to staff (there’s no GST on wages).

- You also cannot claim GST credits if you don’t possess a tax invoice for purchases that are over A$82.50 (including GST).

What GST is refundable?

As touched upon, you can claim back the GST on almost any business purchase that included GST. These claims are called input tax credits.

Here are some of the finer details around claiming a GST refund:

- The easiest way to track your GST claims is to record all of your transactions in your accounting software. When your BAS is prepared from those records, the GST on your sales and purchases is totalled, so the refund (or amount owing) is clear. Talk to your advisor for further advice here.

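- If a customer leaves you with a bad debt (you have already paid the GST on a sale you invoiced, but the customer never pays), you may be able to claim that GST back once the debt is written off as bad or has been overdue for 12 months or more. This applies if you account for GST on an accruals (non-cash) basis. If the customer later pays, you’ll need to pay that GST back.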

- You can claim a GST credit for the business portion of an item you use for both work and personal purposes. For example, if you use an item 60% for business and 40% personally, you can claim 60% of the GST you paid on it.

- You can claim GST credits on GST-inclusive purchases even if the products you sell are GST-free.
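The mixed-use and bad-debt rules above both come down to one-eleventh of a GST-inclusive amount. The sketch below uses hypothetical figures (a $2,200 laptop used 60% for business, and a $1,100 invoice written off as bad), assumes the whole sale or purchase was taxable, and assumes you account for GST on an accruals basis for the bad-debt case. Rounding to the nearest cent is a simplification.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def apportioned_gst_credit(price_incl_gst: Decimal, business_share: Decimal) -> Decimal:
    """GST credit for the business-use share of a mixed-use item.

    price_incl_gst / 11 is the GST in the price at the 10% rate;
    business_share is a fraction, e.g. Decimal("0.60") for 60% business use.
    """
    credit = price_incl_gst / 11 * business_share
    return credit.quantize(CENT, rounding=ROUND_HALF_UP)


def bad_debt_adjustment(unpaid_incl_gst: Decimal) -> Decimal:
    """GST you may claim back on a written-off, GST-inclusive debt (accruals basis)."""
    return (unpaid_incl_gst / 11).quantize(CENT, rounding=ROUND_HALF_UP)


# Hypothetical laptop: $2,200 including GST, used 60% for business.
print(apportioned_gst_credit(Decimal("2200"), Decimal("0.60")))  # 120.00
# Hypothetical unpaid invoice of $1,100 including GST, written off as bad.
print(bad_debt_adjustment(Decimal("1100")))  # 100.00
```

The business share is applied before rounding, so the credit is rounded only once.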

How do I get my GST refund?

To get a GST refund for your business, lodge your completed BAS with the ATO, ideally through your accounting software or your bookkeeper. If your GST credits for the period are greater than the GST you collected on sales, the ATO will refund the difference.

Ensure you keep records of all business-related purchases and invoices to back up your refund claims if necessary.

If you require further information on claiming GST credits, please contact your financial advisor. If you don’t have one, use our free tool to discover one in your area.

You can also find official information about GST credits, and how they affect your taxable income, on the ATO website.