Although business forecasting is grounded in quantitative data, historical performance, solid reporting, and a reasonable appraisal of current conditions, it is arguably as much an art as a science.

For a bookkeeper or advisor, the ability to predict what might lie ahead for a client’s business is hugely valuable. In fact, forecasting can form an important service pillar in your business model.

Any company worth its salt can benefit from business forecasting services: they help reduce uncertainty, support better decisions, and help a business avoid, or prepare for, potential disaster.

The good news? As a trusted advisor, you may well be perfectly positioned to provide these services.

What is business forecasting?

A business forecast uses historical data to predict, as accurately as possible, what’s in store for a business over a future period.

For bookkeepers, accountants and business advisors, a large part of forecasting involves gathering and interpreting business performance data. Historical records of cash flow, expenses, sales, consumer behaviour, market shifts and previous budgets are collected and analysed for patterns.

Pattern identification is key to the art of business forecasting. Once a pattern is identified within a dataset, it’s possible to make relatively accurate predictions (with many caveats) about what may come next. From there, businesses can make more informed decisions about how to act.
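To make the idea of a pattern more concrete, here is a minimal Python sketch that assumes the pattern is a steady, straight-line trend in monthly sales. It fits that trend by ordinary least squares and extends it a few months forward. The function names and sales figures are hypothetical, chosen purely for illustration, and real data rarely follows a line this neatly.

```python
# A minimal sketch: treat a straight-line trend as the "pattern" in the data.
# Function names and sales figures are hypothetical, for illustration only.

def fit_linear_trend(values):
    """Return (slope, intercept) of the least-squares line through the
    points (0, values[0]), (1, values[1]), ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations to fit a trend")
    xs = range(n)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    # Covariance of period number and value, and variance of period number
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, values))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

def project_trend(values, periods_ahead):
    """Extend the fitted trend line a number of periods past the last value."""
    slope, intercept = fit_linear_trend(values)
    n = len(values)
    return [intercept + slope * (n + k) for k in range(periods_ahead)]

monthly_sales = [10_000, 10_400, 10_900, 11_200, 11_800, 12_100]  # hypothetical
print(project_trend(monthly_sales, 3))
```

A straight line is only one possible pattern. Seasonal swings or a sudden shift in the market would need a different model, which is where the caveats come in.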

Why is business forecasting important?

For any business, forging ahead blind, without guidance, is fraught with risk. No sensible business owner wants to navigate the future without some idea of what to expect. This is why business forecasting is so valuable.

Often, your business clients will know or decide exactly what they want to predict. They may be seeking answers to questions such as: “Will consumers want to pay $100 for this product?” or, “What will my profits look like next March?”

Accurate answers to these questions help a company decide how best to act to remain profitable and achieve healthy growth or stability. This is where the intrinsic value of business forecasting becomes clear.

How do you do business forecasting?

The processes behind forecasting are constantly evolving. With the advent of big data, artificial intelligence and new software tools, business forecasting services are becoming easier to deliver and more accurate. Accounting software reports often provide a good portion of the data you can draw predictions from, and there’s a wide range of software and financial modelling tools that can help in this endeavour.

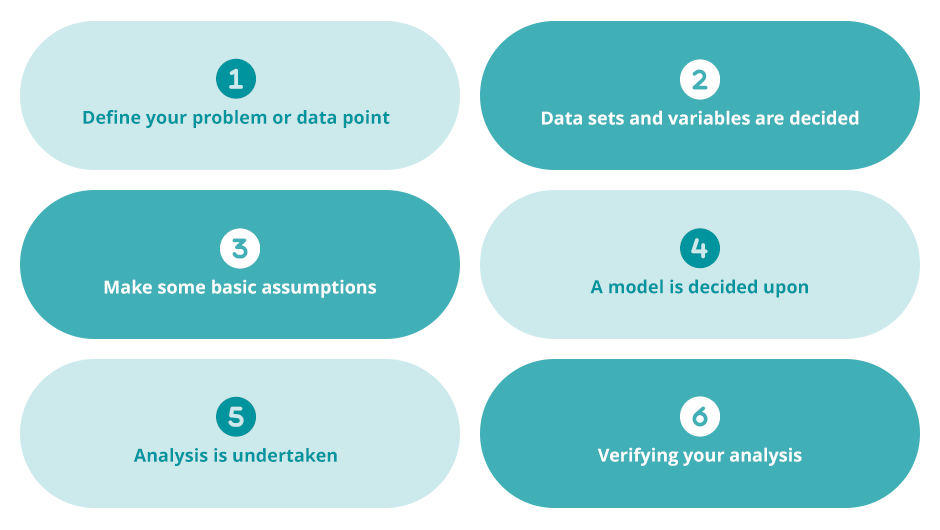

Regardless of the exact software tools or methods you use to form predictions, there’s a common, general business forecasting process:

1) Define your problem or data point

Decide what you want to predict. This may be future sales, upcoming price changes or budgets.

2) Decide on data sets and variables

Choose which data sets will be used to underpin your prediction and what variables will be involved in your modelling.

3) Make some basic assumptions

To save time and data crunching, you can often make some basic, reasonable assumptions about your client’s data, drawing on your experience as a bookkeeper, accountant or business advisor.

4) Put forward a prediction model

Next, choose a prediction model that fits your data set and accounts for your chosen variables and underlying assumptions.

5) Undertake analysis

It’s now time to analyse your data using your chosen model. From your analysis, you’ll arrive at a business forecast or prediction for your client.

6) Verify your forecast

Now that you have your prediction, you can test it over a short period to see how it pans out in reality. From here, of course, you can adjust accordingly.

This is a basic business forecasting process. In practice, there are many different ways of going about it, and the craft becomes considerably more complex once you get into the work.
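The six steps can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a recommended model: the question is next month’s sales, the data set is a hypothetical run of monthly sales, the assumption is that the average of the last three months is a fair guide, and the model is a simple moving average. Step 6 is handled by a backtest that hides the last few months, forecasts each one using only earlier data, and measures the miss as a mean absolute percentage error (MAPE). All function names and figures are hypothetical.

```python
# A minimal sketch of the six-step process, assuming a simple moving-average
# model. Function names and figures are hypothetical, for illustration only.

def moving_average_forecast(history, window):
    """Step 4: forecast the next period as the mean of the last `window` values."""
    if len(history) < window:
        raise ValueError("not enough history for the chosen window")
    return sum(history[-window:]) / window

def backtest(series, window, holdout):
    """Steps 5 and 6: forecast each of the last `holdout` periods using only
    the data available before it, returning (forecast, actual) pairs."""
    results = []
    for i in range(len(series) - holdout, len(series)):
        forecast = moving_average_forecast(series[:i], window)
        results.append((forecast, series[i]))
    return results

def mean_absolute_percentage_error(pairs):
    """Average size of the miss as a percentage of the actual value.
    Assumes every actual value is non-zero."""
    return 100 * sum(abs(a - f) / abs(a) for f, a in pairs) / len(pairs)

# Step 1: the question is "what will sales be next month?"
# Step 2: the data set is monthly sales (hypothetical figures).
monthly_sales = [10_000, 10_400, 10_900, 11_200, 11_800, 12_100, 12_300, 12_900]
# Step 3: assume the average of the last three months is a fair guide.
WINDOW = 3

pairs = backtest(monthly_sales, WINDOW, holdout=3)
error = mean_absolute_percentage_error(pairs)
next_month = moving_average_forecast(monthly_sales, WINDOW)
print(f"Backtest MAPE: {error:.1f}%  Next-month forecast: {next_month:,.0f}")
```

On steadily rising figures like these, a moving average tends to trail behind the actuals, and the backtest makes that visible. That is exactly the kind of finding the verification step is meant to surface before you adjust the model.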

Presenting the forecast to your client

Once you arrive at your business forecast, present the data to your client professionally, including an explanation of the methods you used.

Graphs, charts and presentations that show how to act on your insights will form the core of how you present the work.

Types of business forecasts

There are two primary business forecasting methods: qualitative and quantitative. Let’s unpack each of them.

Qualitative business forecasting

A qualitative forecast relies more heavily on the ‘expert’ factor. It draws on sources such as market research and observations of consumer behaviour. Where solid data is scarce, assumptions about future trends are made based on your experience and expertise.

Quantitative business forecasting

Quantitative forecasting relies much less on expertise and much more on solid data. When you reduce the human factor and its assumptions, and instead lean on past and present sales, expenses and cash flow data, your predictions will generally be more reliable, although they are certainly not immune to error and inaccuracy.
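As an illustration of a purely quantitative method, the Python sketch below applies simple exponential smoothing to a hypothetical run of monthly expenses. It is one common technique among many, chosen here for brevity. The smoothing factor `alpha` is an assumed value that you would normally tune against past data, and the function name is hypothetical.

```python
# A minimal sketch of simple exponential smoothing, one common quantitative
# technique. The function name, alpha and expense figures are hypothetical.

def exponential_smoothing_forecast(history, alpha):
    """Return a one-step-ahead forecast.

    Each new observation pulls the running level towards itself by a
    fraction `alpha` (between 0 and 1): higher values react faster to
    recent data, lower values smooth out more of the noise.
    """
    if not history:
        raise ValueError("history must contain at least one value")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in the range (0, 1]")
    level = history[0]  # start the level at the first observation
    for value in history[1:]:
        level = alpha * value + (1 - alpha) * level
    return level

monthly_expenses = [4_200, 4_350, 4_100, 4_600, 4_450, 4_500]  # hypothetical
print(round(exponential_smoothing_forecast(monthly_expenses, alpha=0.3)))
```

Simple exponential smoothing produces a flat forecast, so it suits figures that hover around a stable level. Data with a clear trend or seasonal pattern calls for an extended method such as Holt’s or Holt-Winters’ smoothing.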

Business forecasting only goes so far…

The issue with business forecasting is that nobody can predict future events with 100 per cent accuracy based on past data alone. When assumptions and qualitative methods are used, accuracy may decrease even further.

Even for a professional advisor or numbers expert, a perfect business forecast is rare. You may be equipped with the best data, the most accurate tools and plenty of experience, yet any forecast will likely be slightly off, or even completely off.

For this reason, it’s important to explain the limitations of your service to any client seeking a forecast. You should never present a forecast as a certainty.