If you are getting into business in Australia for the first time, taxation, tax law and GST can seem like assumed knowledge. Of course, it isn’t.

There is a lot to get your head around in the early stages of a new business, and the goods and services tax is one thing many owners will need to get to grips with. But it’s really not so daunting when you break it down.

What is GST? How much GST do I need to pay? How do I calculate GST? What is the GST threshold? What about GST on exports? How do I register for GST? Let’s find out.

When did GST start in Australia?

On July 1, 2000, the Goods and Services Tax (GST) was introduced to the Australian tax landscape, and its arrival was a pivotal moment in Australia’s economic and taxation history.

By replacing a patchwork of separate taxes on goods and services with a single GST, Australia gained a simpler system.

The idea behind GST is that any time you pay for a product or service, a simple, standard tax is automatically included in the payment.

How much is GST?

The GST rate is a flat tax of 10%. GST is applied to most goods, services and other items sold or consumed in Australia.
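Because the rate is a flat 10%, the GST on a GST-exclusive price is one tenth of that price. The GST already contained in a GST-inclusive price is one eleventh of it, because the inclusive price is 110% of the exclusive price. The following is a minimal Python sketch. It assumes every item is taxable and that amounts are rounded to the nearest cent (half up), which is an assumption about rounding. The function names are illustrative, not an ATO tool.

```python
from decimal import Decimal, ROUND_HALF_UP

GST_RATE = Decimal("0.10")  # flat 10% GST rate
CENT = Decimal("0.01")


def to_cents(amount: Decimal) -> Decimal:
    """Round a dollar amount to the nearest cent (assumed half-up rounding)."""
    return amount.quantize(CENT, rounding=ROUND_HALF_UP)


def gst_on_exclusive(price_ex_gst: Decimal) -> Decimal:
    """GST to add to a GST-exclusive price: 10% of the price."""
    return to_cents(price_ex_gst * GST_RATE)


def gst_in_inclusive(price_inc_gst: Decimal) -> Decimal:
    """GST already contained in a GST-inclusive price: 1/11 of the price."""
    return to_cents(price_inc_gst / 11)


def add_gst(price_ex_gst: Decimal) -> Decimal:
    """GST-inclusive price for a taxable item."""
    return to_cents(price_ex_gst) + gst_on_exclusive(price_ex_gst)


print(gst_on_exclusive(Decimal("200")))  # 20.00
print(add_gst(Decimal("200")))           # 220.00
print(gst_in_inclusive(Decimal("110")))  # 10.00
```

`Decimal` is used instead of floats so that cent amounts are represented exactly.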

GST-free products

There are a few exceptions to the Goods and Services Tax in Australia, many of which can be described as necessities. Basic food and services such as healthcare and education are generally GST-free.

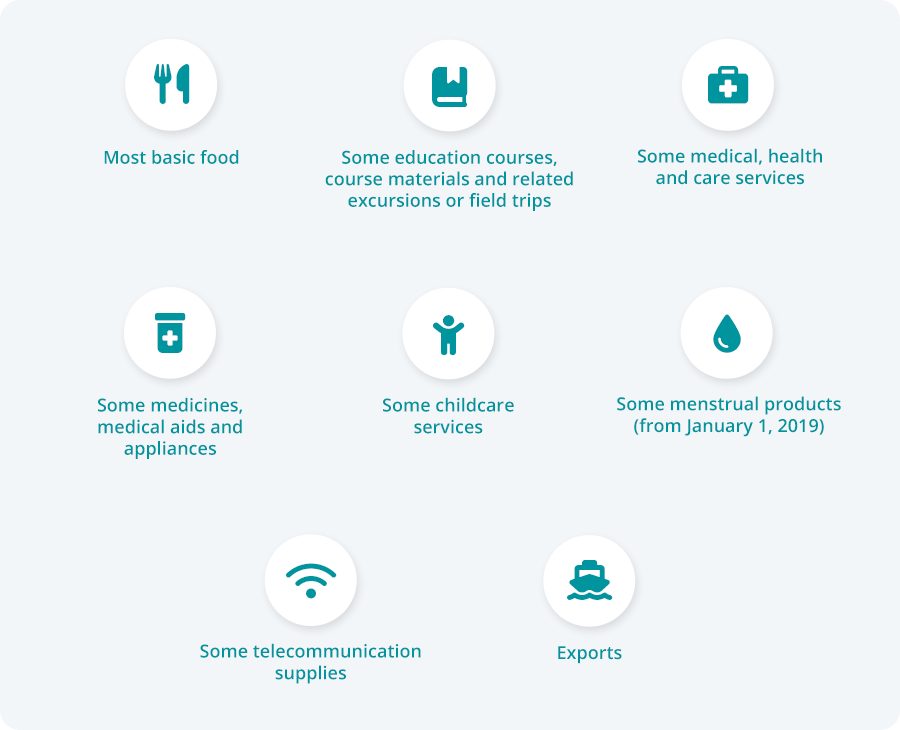

Examples of GST free services and products include:

- most basic food

- some education courses, course materials and related excursions or field trips

- some medical, health and care services

- some menstrual products (from January 1, 2019)

- some medicines, medical aids and appliances

- some childcare services

- exports

- some telecommunication supplies

What is the GST threshold?

Australian businesses and sole traders must register for GST with the Australian Taxation Office (ATO) once their annual GST turnover reaches $75,000 or more.

You must register if your business operates in Australia and its GST turnover has reached, or is likely to reach, the $75,000 threshold. If your business looks likely to cross the threshold, register before you reach it rather than after.

If you underestimated your turnover, you must register within 21 days of becoming aware that it has reached, or is likely to reach, the threshold.
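The threshold test and the 21-day registration window can be sketched in Python. This is a minimal sketch. It assumes the 21 days are counted from the date you become aware that your turnover has reached, or is likely to reach, $75,000; that counting rule is an assumption. The function names are hypothetical.

```python
from datetime import date, timedelta

GST_THRESHOLD = 75_000         # annual GST turnover threshold (AUD)
REGISTRATION_WINDOW_DAYS = 21  # days allowed to register


def threshold_reached(current_turnover: float, projected_turnover: float) -> bool:
    """True if current or projected annual GST turnover is $75,000 or more."""
    return current_turnover >= GST_THRESHOLD or projected_turnover >= GST_THRESHOLD


def registration_deadline(became_aware: date) -> date:
    """Last day to register, ASSUMING the 21 days start when you become aware."""
    return became_aware + timedelta(days=REGISTRATION_WINDOW_DAYS)


if threshold_reached(current_turnover=60_000, projected_turnover=80_000):
    print("Register by", registration_deadline(date(2024, 3, 1)))  # Register by 2024-03-22
```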

How much GST do I have to pay?

If you are a business or sole trader, how much GST you have to pay depends mainly on the GST you charge on your sales and the GST you pay on your business purchases. As we know, GST is a tax of 10% on most Australian goods and services.
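For each reporting period, a registered business generally pays the GST it collected on its sales minus the GST credits it can claim on its business purchases. The following is a minimal Python sketch of that net calculation. It assumes all amounts are GST-inclusive, every listed sale is taxable and every listed purchase is creditable. The function names are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP


def gst_component(amount_inc_gst: Decimal) -> Decimal:
    """GST contained in a GST-inclusive amount (1/11), rounded to the cent."""
    return (amount_inc_gst / 11).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def net_gst(taxable_sales_inc_gst: list[Decimal],
            creditable_purchases_inc_gst: list[Decimal]) -> Decimal:
    """GST collected on sales minus GST credits on purchases.

    A positive result is owed to the ATO; a negative result is a refund.
    """
    collected = sum((gst_component(s) for s in taxable_sales_inc_gst), Decimal("0"))
    credits = sum((gst_component(p) for p in creditable_purchases_inc_gst), Decimal("0"))
    return collected - credits


print(net_gst([Decimal("1100"), Decimal("2200")], [Decimal("550")]))  # 250.00
```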

You will need to register for GST if:

- Your business, sole trader gig or enterprise has a GST turnover of $75,000 or more. Your GST turnover is the sum of the value of all taxable and GST-free supplies you have made, or are likely to make in the financial year.

- You provide taxi or limousine travel for passengers in exchange for a fare as part of your business, regardless of your GST turnover. This includes ride share businesses.

- You would like to claim fuel tax credits for your business, sole trader gig or enterprise.

What happens if your business does not fall into these categories? In this case, registering for GST is optional. However, if you choose to register, you generally must stay registered for at least 12 months.
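The three registration triggers in the list above can be combined into one check. The following is a minimal Python sketch. It assumes each supply's value excludes GST, and that supplies which are neither taxable nor GST-free are left out of GST turnover. The `Supply` class and its `kind` labels are hypothetical.

```python
from dataclasses import dataclass

GST_THRESHOLD = 75_000  # AUD


@dataclass
class Supply:
    value_ex_gst: float
    kind: str  # hypothetical labels: "taxable", "gst_free", or anything else


def gst_turnover(supplies: list[Supply]) -> float:
    """Sum of taxable and GST-free supplies made (or expected) in the year."""
    return sum(s.value_ex_gst for s in supplies if s.kind in ("taxable", "gst_free"))


def must_register(supplies: list[Supply],
                  provides_taxi_or_rideshare: bool = False,
                  claims_fuel_tax_credits: bool = False) -> bool:
    """True if any of the article's three registration triggers applies."""
    return (gst_turnover(supplies) >= GST_THRESHOLD
            or provides_taxi_or_rideshare
            or claims_fuel_tax_credits)


year = [Supply(50_000, "taxable"), Supply(30_000, "gst_free"), Supply(20_000, "other")]
print(gst_turnover(year), must_register(year))  # 80000 True
```

The taxi and fuel-tax-credit flags short-circuit the turnover test, which matches the list's "regardless of your GST turnover" wording for ride-share and taxi services.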

Registering for GST

If you have exceeded the GST threshold of $75,000, or believe you will, you will need to register your business entity with the ATO to pay this tax. You can register for GST on the ATO website.

GST on exports

Do you have to charge GST on the goods and services your business exports? Generally, no. Most exports of goods and services to other countries are GST-free.

If you are already registered for GST, you do not include GST in the price of your exports.

You can still claim GST credits for the GST included in the price of purchases your business uses to make the goods and services it exports.
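The following is a minimal Python sketch of a GST-free export sale. No GST is added to the price charged overseas, but GST credits are still claimed on the related purchases. It assumes each listed purchase is GST-inclusive, creditable and used wholly to make the export. The function names are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP


def gst_credit(purchase_inc_gst: Decimal) -> Decimal:
    """GST credit on a creditable purchase: 1/11 of its GST-inclusive price."""
    return (purchase_inc_gst / 11).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def export_sale(price: Decimal,
                input_purchases_inc_gst: list[Decimal]) -> tuple[Decimal, Decimal]:
    """Return (amount charged to the overseas customer, GST credits to claim)."""
    charged = price  # export treated as GST-free: no GST added to the price
    credits = sum((gst_credit(p) for p in input_purchases_inc_gst), Decimal("0"))
    return charged, credits


charged, credits = export_sale(Decimal("5000.00"), [Decimal("1100.00"), Decimal("220.00")])
print(charged, credits)  # 5000.00 120.00
```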

Applying GST to invoices

If your business is registered for GST, you will need to issue tax invoices to your clients or customers. Your tax invoice needs to include the GST amount for each item. You must supply a tax invoice within 28 days if all of these apply:

- The sale is taxable.

- The sale is more than $82.50 (including GST).

- Your customer asks for a tax invoice.

Note: If your business is not registered for GST, you cannot issue a tax invoice, even if your customer or client asks for one. Make this clear on your invoice instead. For example, you can state ‘no GST applied’.
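A tax invoice shows the GST amount for each item. The following is a minimal Python sketch of building those invoice rows. It assumes prices are entered GST-exclusive, GST is rounded per line to the nearest cent (half up), and GST-free items carry a GST amount of 0.00. The function names and sample items are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP

GST_RATE = Decimal("0.10")
CENT = Decimal("0.01")


def invoice_lines(items: list[tuple[str, Decimal, bool]]) -> list[tuple[str, Decimal, Decimal, Decimal]]:
    """Turn (description, price_ex_gst, taxable) items into invoice rows.

    Each row is (description, price_ex_gst, gst, price_inc_gst).
    """
    rows = []
    for description, price_ex_gst, taxable in items:
        if taxable:
            gst = (price_ex_gst * GST_RATE).quantize(CENT, rounding=ROUND_HALF_UP)
        else:
            gst = Decimal("0.00")  # GST-free item: no GST charged
        rows.append((description, price_ex_gst, gst, price_ex_gst + gst))
    return rows


def invoice_total(rows: list[tuple[str, Decimal, Decimal, Decimal]]) -> Decimal:
    """GST-inclusive total of all invoice rows."""
    return sum((row[3] for row in rows), Decimal("0"))


rows = invoice_lines([
    ("Consulting (taxable)", Decimal("500.00"), True),
    ("Basic food item (GST-free)", Decimal("4.50"), False),
])
for row in rows:
    print(*row)
print("Total", invoice_total(rows))  # Total 554.50
```

Rounding per line keeps each printed row self-consistent. Rounding once on the invoice total is another approach, so treat the per-line choice here as an assumption.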

Further GST information

If you require more information on your business’s tax obligations related to GST, please refer to the ATO website.