CASE STUDY

Scouts SA

Reckon makes financial reporting and complex multi-site bookkeeping a manageable affair

Simple and intuitive accounting software for community groups and not-for-profits.

Jacqui Wall, Branch Commissioner Finance Support Team Leader for Scouts SA, recently hit a wall.

Responsible for financial support for Scout Groups in SA, she had a clear underlying mission, set out in the Scouts’ ethos: to inspire young people to excel in life.

Yet to help a diverse range of young people achieve their goals while learning and growing together, each Scout Group also needs financial accountability, especially when its funds are often raised through community fundraising. This is where Jacqui steps in.

Tasked with coordinating a vast and disparate set of books and accounts, she needed a modern, standardised process.


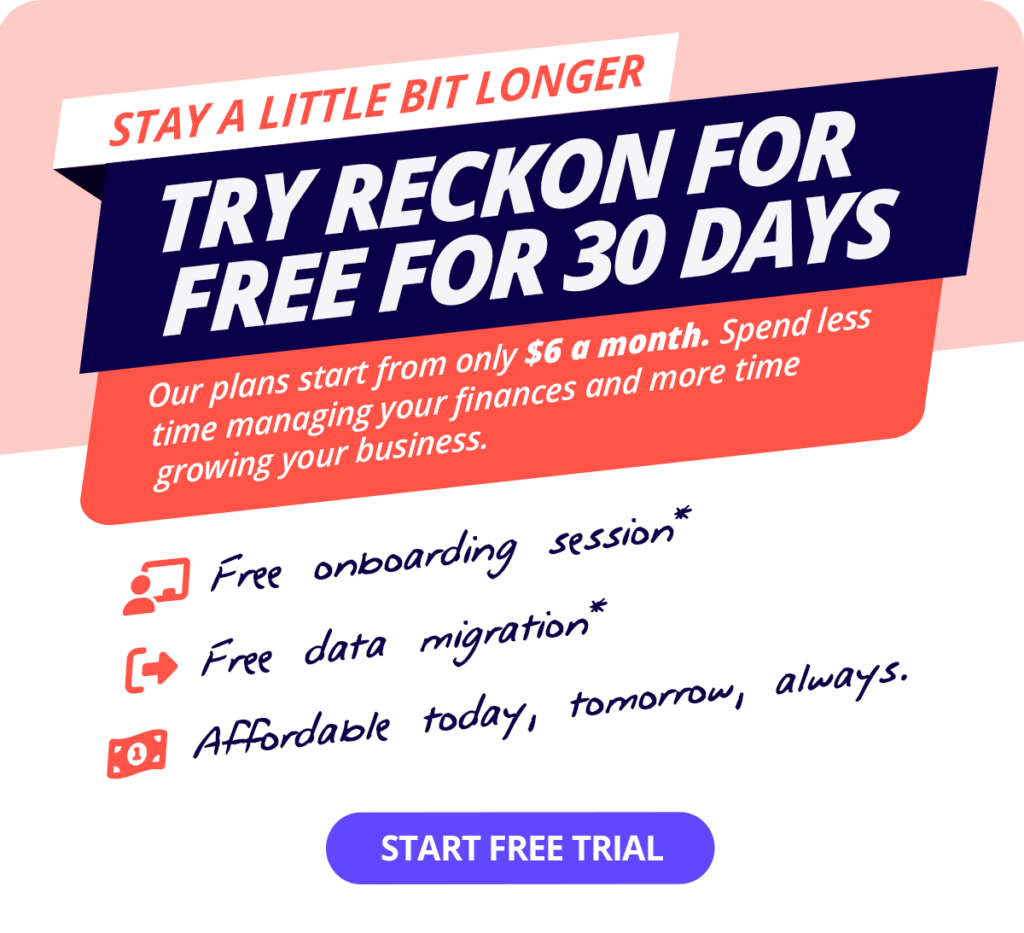

“Extensive research was undertaken to find an online commercial package that would suit the needs of our 94 Scout Groups, but would also be cost effective and not break the banks. Enter Reckon One.”

– Jacqui Wall, Branch Commissioner, Finance Support (Team Leader), Scouts SA.

THE CHALLENGE

Complex multi-site financial reporting poses a challenge

In SA, there’s a requirement for Scout Groups to have their finances reviewed annually, with results reported to the Scouts SA Head Office.

“This was always a challenge as many Scout Groups went out and sought qualified accountants or auditors to review their books which was never the intent and often cost money.”

As Jacqui tells us,

“There is a Finance Support Team here in South Australia who all have extensive bookkeeping/financial backgrounds and were available to review Scout Groups’ finances and supply appropriate oversight and review to meet the requirements of Head Office.”

Yet these resources were not being taken advantage of.

“The challenges became obvious when financial information was supplied in various formats – commercial accounting packages that had been purloined from home businesses (and not necessarily transferable at a later stage), Excel spreadsheets of varying states of expertise, beautifully presented folders with monthly dividers and copies of invoices and bank statements – right down to shoeboxes stuffed with screwed up bits of paper.”

Clearly, Scouts SA needed a standardised method of financial reporting, one that would replace shoebox accounting and inconsistent methods while also being modern, affordable, and easy to use.

Enter Reckon One…

“The visibility and accountability of finances across all areas of Scouting is of paramount importance.”

Success with Reckon One

If you look at the original Scout motto laid out by Robert Baden-Powell, ‘Be Prepared’, you’ll see why Jacqui is transitioning all 94 Scout Groups in SA to Reckon – they also need to be prepared financially.

To organise the Scout Groups’ finances effectively, Jacqui chose Reckon One and set to work becoming accredited as a Cloud Advisor. She was then able to set up books for each Scout Group, standardising all financial reporting with tailored Charts of Accounts that remain flexible to each Scout Group’s requirements.

“Currently, it is required that Scout Groups use the Basic Core Package along with the Invoicing Module as a minimum.

Once the transition to Reckon One is finalised in early 2023, it will make the review and reporting process so much easier.

Scout Groups will be using the same platform and, with the ability for the Finance Support Team to view the books in real time, the review process can be completed in a timely manner.

It is, at the end of the day, funds raised for the benefit of Scouting and needs to be recorded with the utmost care, not only to protect our Members but also to comply with our Corporate Governance requirements.”


“The challenges became obvious when books were supplied in various formats – from Excel spreadsheets right down to shoeboxes stuffed with screwed up bits of paper.”

– Jacqui Wall, Scouts SA

About Scouts SA

Scouting is about contributing to the development of young people by helping them learn and grow, and to play a constructive role in a global society.

Fun, challenges, adventure and lifelong friendships await young people and adults who join Scouts SA.

Learning life skills, building resilience, growing in self-confidence and gaining valuable leadership and team skills are all part of the program.

Scouts SA provides fun youth development activities, building resilient and confident young people aged 5 to 25.