Accounting software for retail businesses

Spend less time on admin and more time on your business with our retail accounting software.

Why should you choose us?

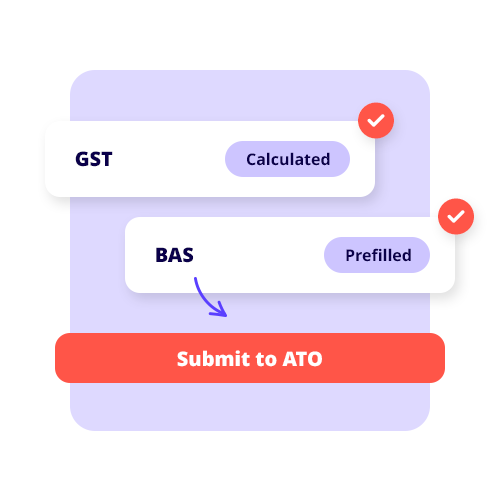

Take the hassle out of GST & compliance

Our accounting software for retail businesses gives you automatic compliance updates for PAYG and GST, so it's easy to process tax payments and keep up to date with the latest legislation. Plus, as a small business owner, you'll always be working on the latest version, giving you peace of mind and more time for doing what you love.

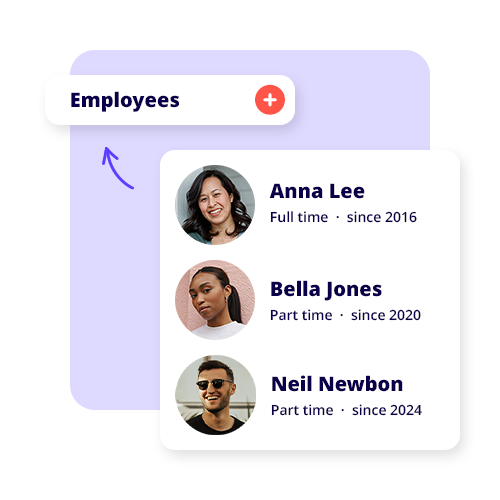

Easily manage your employees

Easily track time and expenses

Invoice on the go and get paid faster

“Life is busy, so having both businesses streamlined through Reckon is one less thing to think about.”

Amanda Stevens, Gravel & Grace

The right online accounting software for retail businesses

Many retail business owners set up eCommerce platforms on their websites to broaden their audience and revenue streams. This was the case for Amanda Stevens, owner of the Gravel & Grace boutique in Julia Creek, Queensland.

After establishing and fitting out her clothing and lifestyle store front, she embarked on setting up eCommerce functionality on her website.

Learn more about Amanda’s journey with Reckon One here.

Related resources

Small business news wrap for August!

What’s been happening recently in the Australian business landscape? What are the top news stories through August 2023 that may affect your small business? Let’s do a quick recap to keep you up to speed…

Retailing mistakes you need to stop

We’ve identified five common mistakes that many retailers continue to make that could have major repercussions for your retail business.

Free downloadable templates

Explore our range of guides and easy-to-use templates to help you run your business.

Plans that fit your business needs and your pocket

30-DAY FREE TRIAL + SAVE 50% FOR 6 MONTHS ON ALL PLANS.

Payroll Essentials

For businesses with up to 4 employees

$6/month

Was $12

Save $36 over 6 months

Up to 4 employees**

Process pay runs

Email payslips

Manage Single Touch Payroll

Calculate superannuation

Track leave & entitlements

Payroll reporting

Payroll companion app

Employee facing app

Track timesheets

Employee expense claims

Free data migration††

Free onboarding session

Advanced reporting

Payroll Plus

For businesses with up to 10 employees

$12.50/month

Was $25

Save $75 over 6 months

Up to 10 employees**

Process pay runs

Email payslips

Manage Single Touch Payroll

Calculate superannuation

Track leave & entitlements

Payroll reporting

Payroll companion app

Employee facing app

Track timesheets

Employee expense claims

Free data migration††

Free onboarding session

Advanced reporting

Payroll Premium

For larger businesses

$25/month

Was $50

Save $150 over 6 months

Unlimited employees**

Process pay runs

Email payslips

Manage Single Touch Payroll

Calculate superannuation

Track leave & entitlements

Payroll reporting

Payroll companion app

Employee facing app

Track timesheets

Employee expense claims

Free data migration††

Free onboarding session

Advanced reporting

† Transactions that exceed the 1,000-transaction limit will be subject to the BankData Fair Use Policy.

†† Free data migration offer includes 1 year of historical data plus year-to-date (YTD) data only. Paid subscriptions only.

Helping thousands of businesses with their accounting

Frequently asked questions

How does the 30-day free trial work?

The Reckon One free trial allows you to try our accounting software for a period of 30 days to ensure it meets the needs of your business. After this period, your subscription will automatically convert to a paid one to avoid any interruptions to your data. However, if you find that Reckon One small business invoicing software is not suitable for your needs, you can cancel your subscription before the billing renewal date and your credit card won’t be charged.

If life got in the way and you weren’t able to use your trial, no worries! Just give our friendly support team a shout and we’ll see if we can get you up and running again.

Can I change my software plan later on?

Definitely! Reckon One offers you the flexibility to change your plan to fit the unique needs of your business. Whether it’s downgrading or upgrading, you can easily make these changes right from your Reckon account.

How do I switch from another accounting software to Reckon One?

Making the switch to Reckon One from your current small business accounting software is a breeze with our data migration service!

Head to our data migration page and see how our free* migration service works.

What do I need to get set up with Reckon One?

There is no software installation required. All you need is a device with an internet connection to access your Reckon One account. Simply sign up for an account on our website and start using Reckon One to manage your business.

Is my data secure?

We use the best technology to ensure your data is safe and secure. Our accounting software for small business, Reckon One, is built with cutting-edge HTML5 technology and hosted on Australian servers powered by Amazon Web Services, a leader in cloud data storage.

Do you provide customer support?

Our local team offers support by email, chat and phone, and resources such as webinars, a small business resource hub and an online community are there to help you succeed from the moment you start using Reckon One.

Expert training is also available through the Reckon Training Academy or our trusted partners (accountants and bookkeepers).

Can I grant access to other people?

What are the advantages of using accounting software for small businesses?

Mobility

Online accounting software like Reckon One gives you the flexibility and mobility to manage your finances from any device. Gain clarity over your business’s financial position, automate your accounting process & reduce data entry, track business transactions, get paid faster, and more.

The latest version

Our small business accounting software Reckon One is automatically updated in the cloud. So you’ll always use the latest version without having to manually download compliance updates and accounting features.

Cost-effective

Reckon One uses a SaaS (software as a service) pricing model, so you pay a low monthly fee instead of a large upfront payment for a software licence. Paying month to month also means you aren't locked into a contract and can cancel anytime.

Security

Your data is safely stored in the cloud so you won’t be affected by theft or accidents to physical hardware. All data servers have 24/7 security and several layers of encryption.

Is there a minimum subscription period?

Enjoy the benefits of Reckon One with the flexibility of monthly payments, and if you decide it's not the right fit for your business, you can easily cancel at any time.

Try Reckon for free today

30-day free trial. Cancel at any time.