Looking for a Xero Payroll alternative?

Reckon is 82% cheaper than Xero for Payroll

Save money: Reckon’s payroll starts from $12/month, while Xero starts at $70/month.

Get more features for less

Xero “Grow” plan vs Reckon “Payroll Essentials” plan.

1 employee only

Free phone support

$70.00

*This table compares Xero’s “Grow” plan, which includes payroll for 1 employee, with Reckon’s “Payroll Essentials” plan, which covers up to 4 employees. Reckon’s unlimited free phone support is available during business hours. Data correct as of July 2024.

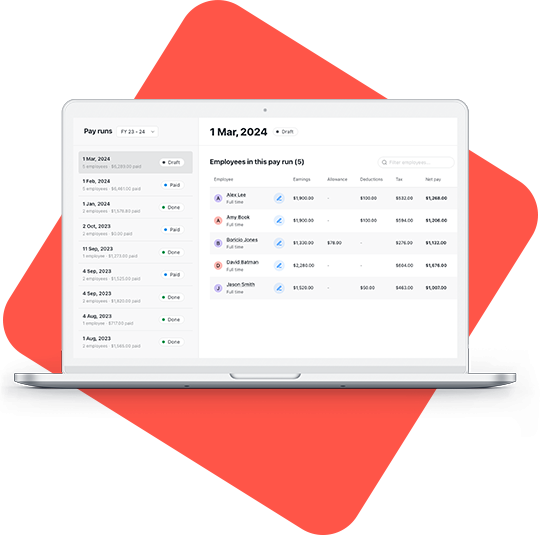

Better value as your business grows

Reckon’s superior customer support

Say goodbye to the hassle of logging tickets and three-day waits with Xero. At Reckon, we prioritise service to small business owners. Call our team of experts: the majority of calls are answered within 2 minutes!

We grow with you, without the price tag

If your business scales to more than 4 employees, Xero requires you to upgrade to its ‘Comprehensive’ plan ($90 for 5 employees) or ‘Ultimate’ plan ($115 for 10 employees). Reckon’s next tier is just $25/month for up to 10 employees, making it an affordable and risk-free option for a small business to scale.

Mobile companion payroll app

Reckon Payroll includes a mobile companion app, so you can manage payroll on the go, from anywhere! Download the app on iOS or Android for free as part of your Reckon Payroll subscription.

Migrate from Xero to Reckon for free

Moving your data from Xero to Reckon is quick and easy with our migration service. It’s free when you sign up for a Reckon Plus or Premium plan.

Affordable payroll for all team sizes

You pay more for less support.

…while Reckon Payroll starts from just $12/month with unlimited phone support.

There are a million reasons to love Reckon

Convinced Reckon Payroll is the perfect Xero alternative for small businesses?

Try Reckon Payroll for free, cancel anytime.