Looking for a Zoho alternative?

Get better bang for your buck with Reckon accounting software

Reckon One pricing starts from only $12/month, while Zoho starts from $16.50!

Get more features for less

Zoho Books ‘Professional’ plan vs Reckon ‘Accounting Plus + Payroll Essentials’ plan.

Send invoices

Track expenses

Pay runs & STP

Up to 4 employees

Automate super

Bank feeds

Budgeting

Phone support

Monthly price

$39.60

$32.00

Reckon One’s unlimited free phone support is available during business hours. This table compares Reckon with the Zoho Books ‘Professional’ plan. Data correct as of July 2024.

Better value as your business grows

Unlimited invoices

Payroll software for your employees, including Single Touch Payroll

Unlimited users, so you can share your files with the right people.

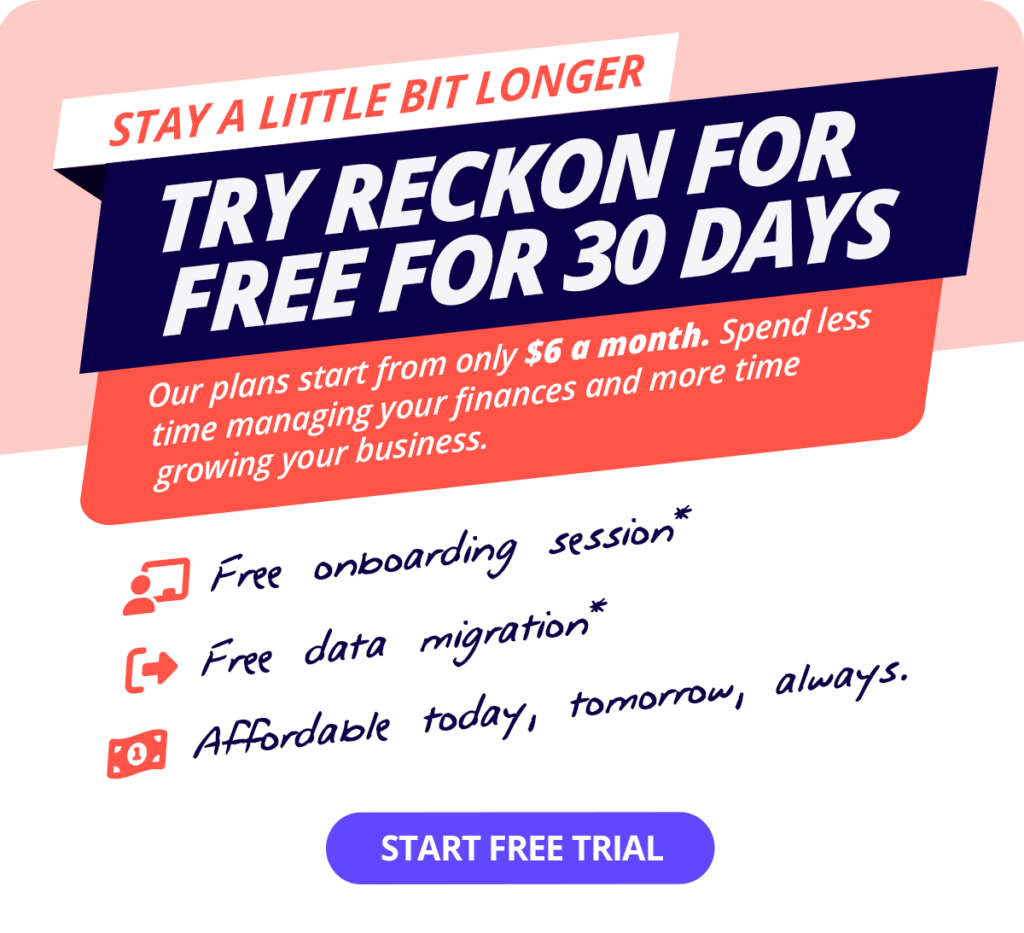

Migrate from Zoho to Reckon for free

Moving your data from Zoho to Reckon is quick and easy with our migration service. It’s free when you sign up for a Reckon Plus or Premium plan.

Affordable payroll for all team sizes

Zoho does not include payroll features in Australia

If you want to manage payroll, you will need another platform, as Zoho does not support this feature. Using separate platforms for accounting and payroll is not only inconvenient, it can be very expensive too!

…While Reckon One starts from just $12/month

Even when you scale up the size of your team, you’ll only pay $24/month to manage payroll and send Single Touch Payroll reports!

Choose Reckon One and enjoy more features, for less!

There are a million reasons to love Reckon

Convinced Reckon One is the perfect Zoho alternative for small businesses?

Try Reckon One for free, cancel anytime.