Looking for a Xero alternative?

Get better bang for your buck with Reckon accounting software

Reckon One pricing starts from only $12/month, while Xero starts from $35!

Get more features for less

Xero ‘Ignite’ plan vs Reckon ‘Accounting Plus’ plan

Track GST & BAS

Cash flow reporting

Create budgets

35+ insightful reports

Bank account feeds

Create & send invoices (Xero: 20 invoices only)

Bills (Xero: 5 bills only)

iOS & Android invoice app

Free phone support

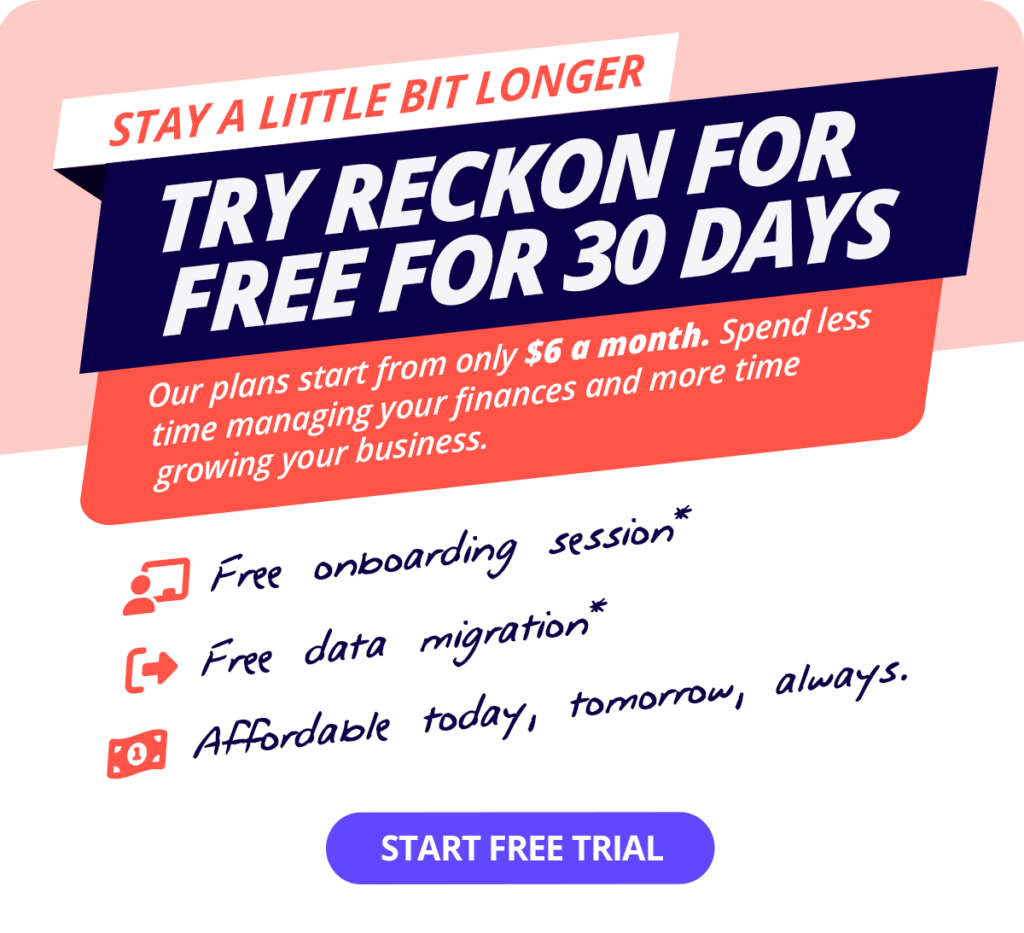

Free onboarding session

Monthly price: Xero $35.00 vs Reckon $20.00

This comparison table includes data from Xero’s ‘Ignite’ plan and Reckon’s ‘Accounting Plus’ plan. Reckon and Xero data are correct as of July 2024. Reckon’s free phone customer support is available during business hours.

Better value online accounting software as your business grows

Unlimited invoices

Reckon accounting plans include unlimited invoices, so you pay one low flat fee no matter how many you need to send! It’s the perfect alternative to Xero, which caps the number of invoices you can send.

Payroll software to suit your business needs

If you need payroll, Reckon charges $12/month for up to 4 employees (including a user-friendly free mobile app). On Xero, you have to be on a $90/month plan to cover 4 employees. Ouch!

Unlimited users & team members

Reckon places no limit on admin team members with logins, so you can invite anyone on your team, your accountant, or family members to log in at no extra cost.

Migrate from Xero to Reckon for free

Moving your data from Xero to Reckon is quick and easy with our migration service. It’s free when you sign up for Reckon Plus or Premium plans.

Affordable accounting for all team sizes

Beware of the steep price jumps between plans on Xero. Reckon provides value that lasts as your business grows.

Xero starts at $35 per month*

- But if you send more than 20 invoices a month, you’ll be charged $70 per month*.

- Add 1 employee and you’ll be charged $70 per month.

- Add 4 employees and you’ll be charged $90 per month. Ouch!

- Want to track expenses? Beware: to get Xero Expenses for 1 employee, you must be on their $70/month plan, and each additional employee costs an extra $5 per user per month!

All these additional costs add up for small businesses. That’s why Reckon is the ideal Xero alternative.

While Reckon starts from just $12 per month

- Our Invoice package includes unlimited invoices

- Our payroll package includes payroll for up to 4 employees for just $12/month

- There’s no limit on admin users logging into your account

So you can be confident that your accounting software costs won’t rise steeply as your business and cash flow grow.

* Pricing listed includes Xero’s 1 July 2024 price increase and new plan names, and reflects the monthly cost of Xero’s ‘Grow’ and ‘Comprehensive’ plans at the standard price, correct as of June 2024.

Fairly priced, real customer service and a great product. I'm so happy to have left Xero.

Harry

There are a million reasons to love Reckon

Convinced Reckon One is the perfect Xero alternative for small businesses?

Try Reckon One for free, cancel anytime.