2024 Federal Budget Update

Understand the key takeaways from the May 2024 Federal Budget and how it will affect your business.

What is the budget?

The Australian Federal Budget is prepared by the Treasurer and presented to Parliament. It outlines the Australian Government’s proposed revenue and spending for the upcoming financial year. The Federal Budget is also a political statement of the government’s intentions and priorities for that year and beyond.

The majority of government revenue comes from income and company tax, the goods and services tax (GST), and interest and dividends. The government needs this revenue to pay for the public goods and services it provides, including education, health, defence, infrastructure, welfare, and much more.

The small business budget wish list

What did the May 2024 budget deliver?

The 2024 Federal Budget focused largely on cost-of-living and inflation mitigation measures. While not a heavily SME-focused Budget, it still included plenty for Australian small businesses. Here are the key takeaways from the latest Budget announcement that may affect you and your business.

The latest resources to help you manage the budget

The 2024 Federal Budget recap for small businesses


Getting your head around the constantly shifting instant asset tax write-off


What are Australian businesses looking for in the upcoming 2024 Federal Budget?


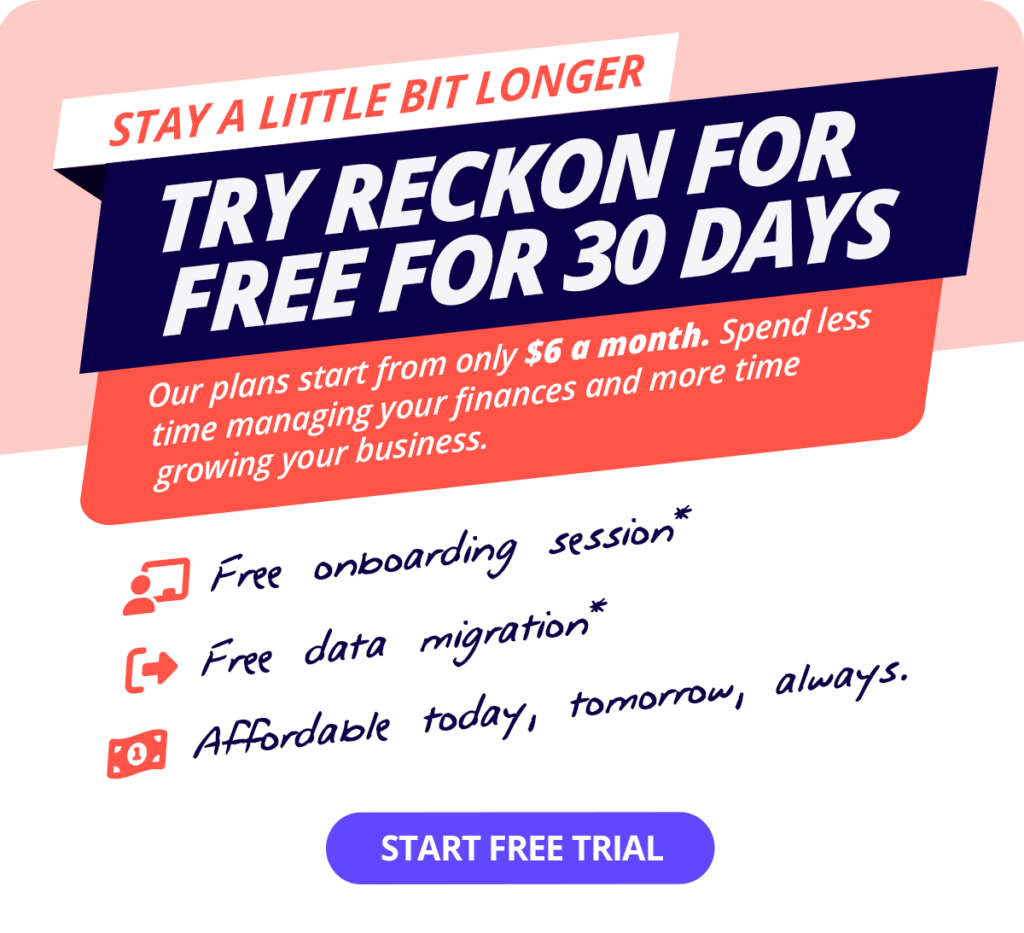

You’ll always be ready for tax time with Reckon One!

Take control of your finances in the new financial year.

Cancel anytime.